We often talk about computer programs purely as collections of “code”. Lately I’ve been thinking about it a bit differently: a computer program is the encoding of a huge series of nested decisions, made intentionally or otherwise.

Let’s say we’ve decided to build a calculator app. That overall goal is the first decision, but there are many more decisions nested underneath it.

- What features does our calculator app need? (Just basic arithmetic or are we building a graphing calculator?)

- What platform are we building for? (web, mobile, desktop, VR?)

Once we know generally what we’re trying to do, there are still a bunch of fundamental decisions to be made before we write any code:

- Is this a client-only app or is there a server component?

- What framework should we use?

- How will the app be hosted and distributed?

Then once we start actually writing code, we find even more nested decisions.

- How are we going to structure our files?

- How will we break functionality up into components, classes and/or functions?

- Is this a single file, a single app, or some sort of distributed system?

And then within a given class we nest even more decisions:

- What functionality should the class have?

- What is the high level logic of each function?

And then we come down to the lowest level of decisions:

- What syntax will we use for that? (For example: for loops or iterators to run through a list)

- Will we use a library or write some functionality from scratch?

- Tabs or spaces? Single quotes or double quotes?
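As a small illustration of a syntax-level decision, here is a sketch of the "for loops or iterators" choice for a calculator that sums a list of numbers. The function names are hypothetical and Python is just an assumed language for the example; both versions produce the same result, and the only thing that differs is the decision about how to write it.

```python
# Two hypothetical ways to add up a list of numbers.
# The behavior is the same; only the syntax-level decision differs.

def total_with_loop(numbers):
    """Explicit for loop with a running accumulator."""
    total = 0
    for n in numbers:
        total += n
    return total


def total_with_iterator(numbers):
    """Iterator style: feed a generator expression to the built-in sum()."""
    return sum(n for n in numbers)


values = [1, 2, 3, 4]
print(total_with_loop(values))
print(total_with_iterator(values))
```

Neither option is wrong. This is exactly the kind of decision that a style guide, a linter, or a coding tool can make for us without changing what the program does.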

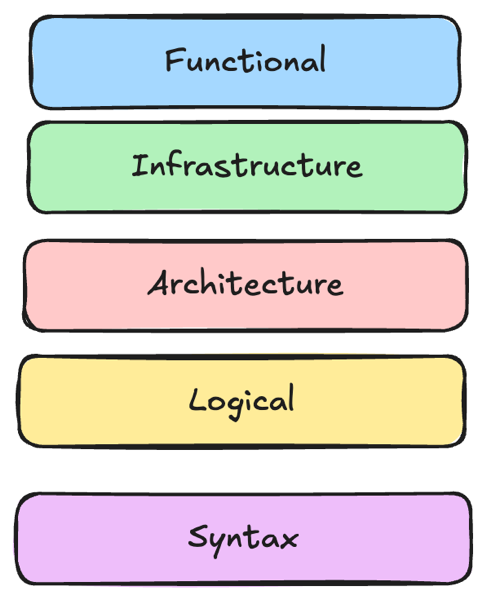

We can think of these as functional, infrastructure, architecture, logical, and syntax-level decisions.

As tools have changed, the history of computing has mostly been a story of changing which of those decisions a software developer needs to make. Many of those changes have removed the need to make decisions in favor of a generally acceptable standard implementation. The vast majority of programmers no longer worry about the details of the bytecode or assembly definitions of programs; those have been standardized by higher-level languages. Most modern languages also remove or significantly reduce decision making around memory management. Cloud platforms reduce[^1] the decisions many devs need to make around infrastructure for their apps, and frameworks like Django or Ruby on Rails reduce the decisions somebody needs to make about how to structure their API code.

Each of these abstractions has allowed developers to focus more on the decisions around what their program should do and less on the implementation details of how it does it. They’re also all leaky abstractions to varying degrees, and there are times and situations where a developer will need to go without or work around the standard tool to make a different decision.

Applying this model to LLM-based coding tools, it’s useful to talk about the use of LLMs in terms of which decisions we’re making ourselves vs offloading to the coding tool. LLMs are essentially tools for replacing decisions that you might have had to make while generating text with a reasonable standardized option. However, the way we use them can lead to different sets of decisions being made by the tools.

Vibe Coding

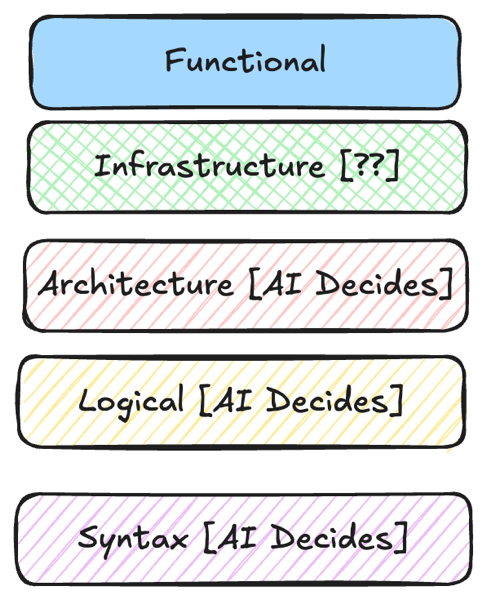

Vibe coding, the buzzword of the year in tech, is commonly understood to mean that you’re creating an application solely through prompting and iterating based on the observed output, without reading the code. This is effectively choosing to make decisions at the functional level (and maybe the infra level) and letting AI handle the rest.

Tab Complete

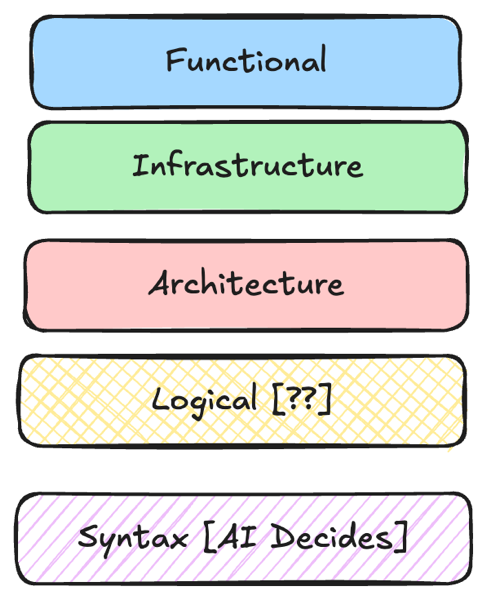

We can contrast this with a tab complete interface like those provided by GitHub Copilot or Cursor, where an IDE will offer to insert a chunk of code based on context. Here AI is mostly deciding on syntax, with more ambitious implementations like Cursor possibly moving up to the logical level.

Feature Development With Agents

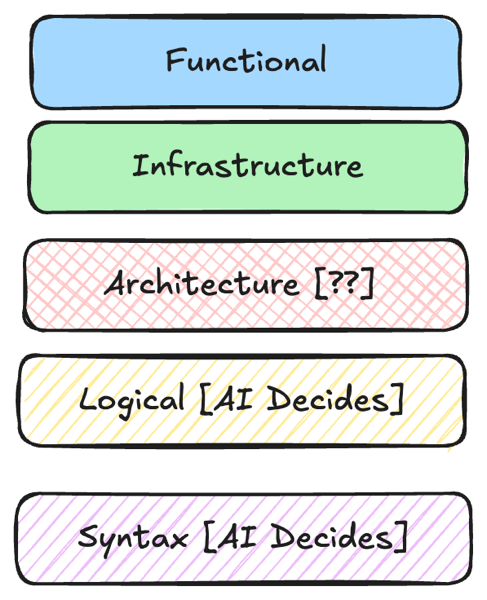

There’s a lot of room between these two extremes. Tools like Claude Code, Cursor and Copilot can take on tasks at a variety of levels depending on how they’re prompted. You can ask a coding agent to generate a new feature, for instance, which might live completely at the logical / syntax level, or could end up reaching into architecture if the feature has new architectural considerations or the coding agent introduces a new pattern or dependency.
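One rough way to make the comparison concrete is to write the three modes down as data. The sketch below is only my reading of the descriptions above, not a precise taxonomy: the `Level` and `DELEGATED` names are made up for illustration, and the "maybe" cases (infra for vibe coding, logic for tab complete, architecture for agents) are deliberately left on the human side.

```python
from enum import IntEnum


class Level(IntEnum):
    """The five decision levels, from highest to lowest."""
    FUNCTIONAL = 1
    INFRASTRUCTURE = 2
    ARCHITECTURE = 3
    LOGICAL = 4
    SYNTAX = 5


# Hypothetical mapping of each mode to the levels handed off to the tool.
# Borderline cases are assumptions and are kept with the human here.
DELEGATED = {
    "vibe coding": {Level.ARCHITECTURE, Level.LOGICAL, Level.SYNTAX},
    "tab complete": {Level.SYNTAX},
    "feature development with agents": {Level.LOGICAL, Level.SYNTAX},
}


def human_decides(mode):
    """Return the levels the developer still owns in a given mode."""
    return [level for level in Level if level not in DELEGATED[mode]]


for mode in DELEGATED:
    print(mode, "->", [level.name.lower() for level in human_decides(mode)])
```

In practice the boundaries shift with the prompt and the task, so a mapping like this is a starting point for the question "who is deciding this?" rather than an answer.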

Who decides what?

As I’m personally moving back into more of a heavy coding role this month, I find this framework of who is making decisions helpful.

While building enterprise software at work, I’m ultimately responsible for the quality of all the code I write. I find it helpful to move faster by using AI tools to get a rough solution in place, but I encode some decisions through the prompts I give, and I end up reviewing and tweaking everything to make sure that what comes out of the PRs I open represents my decisions. I’m also quickly building an intuition for which tasks a coding agent is likely to produce useful output for that I only need to tweak, versus ones where I’m unlikely enough to agree with its decisions that it’s faster to just write the code myself[^2].

On the other hand, if I want to experiment with something quick and dirty or prototype a concept, the freedom these tools give to ignore every level below the functional one is wonderful (I just don’t want to maintain such a system).

Working with these tools has reminded me once again that reality has a surprising amount of detail. There is no magic in the world and decisions are always going to have to be made. If coding tools help us to go faster by focusing more on the decisions that really matter, I’m excited to see what they help us build.

[^1]: OK, given the huge ecosystem of choices and configuration in cloud computing, it’s probably more accurate to say that it changes the decisions devs need to make.

[^2]: Of course, given the rapid evolution of the tools, this is something that needs to be rechecked over time. Claude Sonnet can reliably do things that GPT-3.5 would consistently fail on, and the agentic system seems to have a good deal of impact on quality as well.